Master Thesis

Abstract

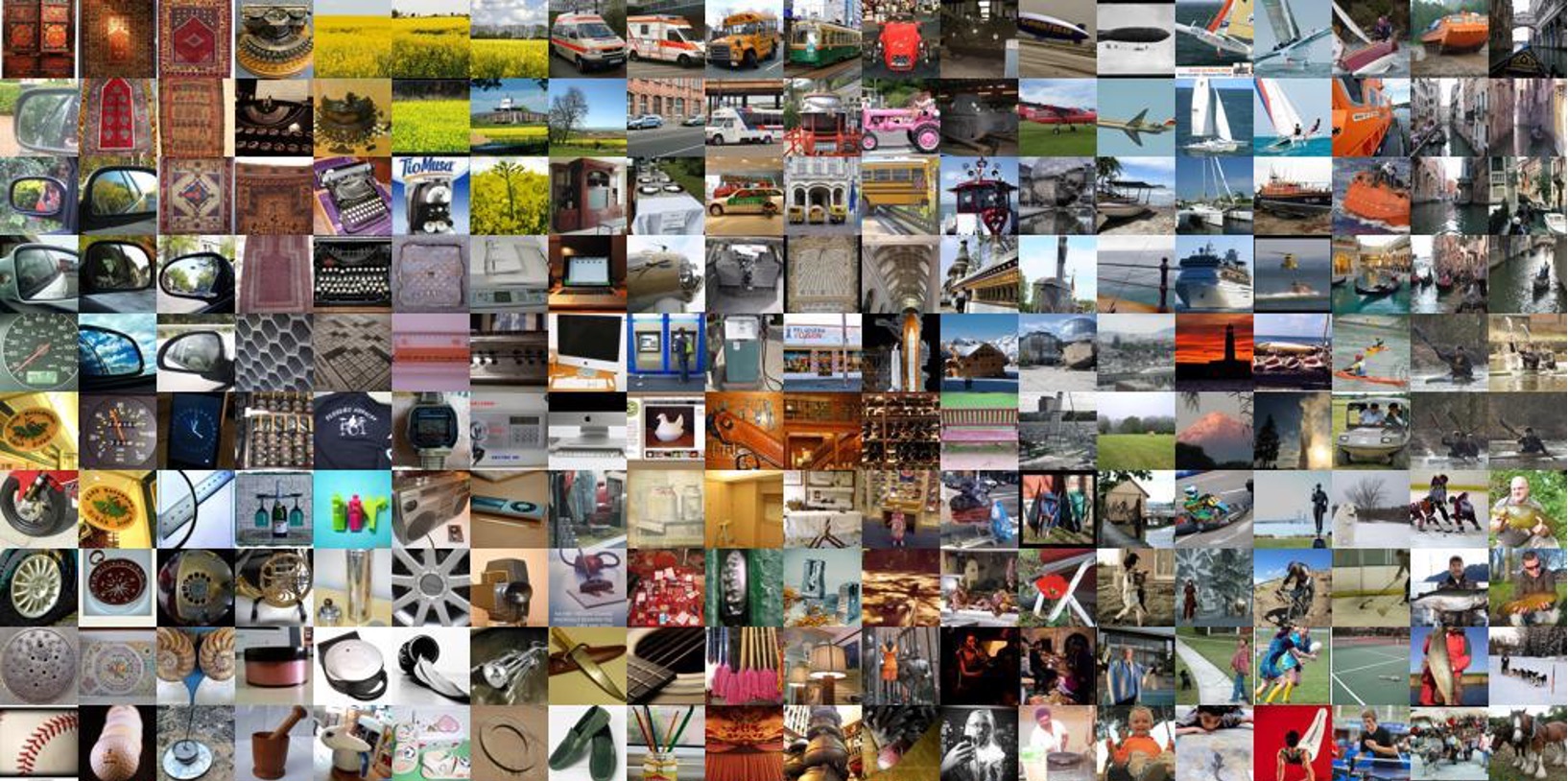

For many years, deep neural networks have achieved astonishing performance in image classification. These networks work very well when the classes seen during training and testing are the same. In real-world deployment, however, samples of previously unseen classes often occur and should be rejected, while samples of classes seen during training should still be classified correctly. Open-set classification addresses exactly this setting by artificially simulating such a real-world scenario. Traditional approaches to open-set classification are usually based on a single network that outputs probabilities over all classes. In this thesis, we take a different path and use ensembles of binary classifiers to tackle the open-set task. The multiclass classification problem is split into many binary classification problems, which are learned separately and then aggregated to obtain a classification result. We cover the whole process, from the creation of the binary problems to training the binary classifiers and evaluating them appropriately. The experiments are conducted on subsets of the ImageNet dataset that are designed to represent different levels of difficulty for the open-set task. We show that the performance of our binary ensemble approach on the open-set task is on par with Softmax-based methods. When no negative samples are available for training, the binary ensemble even outperforms a comparable Softmax-based approach. Moreover, good performance can be achieved with only a small number of binary classifiers.
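The abstract only summarizes how binary problems are created and aggregated. As a hedged illustration, the sketch below assumes an error-correcting-output-code (ECOC) style scheme. Each binary classifier separates a random half of the known classes from the other half. At test time, each known class is scored by how well the classifiers' outputs agree with that class's code, and a sample is rejected as unknown when even the best agreement falls below a threshold. The function names `make_code_matrix` and `classify_open_set`, the random balanced splits, the mean-agreement score, and the threshold rule are all hypothetical choices for illustration. They are not necessarily the scheme used in the thesis.

```python
import random
from typing import List, Optional, Sequence, Tuple


def make_code_matrix(num_classes: int, num_classifiers: int, seed: int = 0) -> List[List[int]]:
    """Hypothetical ECOC-style split: row c is the code of class c,
    column j says whether class c is in the +1 or -1 group of binary problem j."""
    rng = random.Random(seed)
    matrix = [[0] * num_classifiers for _ in range(num_classes)]
    for j in range(num_classifiers):
        classes = list(range(num_classes))
        rng.shuffle(classes)
        half = num_classes // 2
        # First half of the shuffled classes forms the +1 group, the rest the -1 group,
        # so every binary problem has both groups non-empty (for num_classes >= 2).
        for rank, c in enumerate(classes):
            matrix[c][j] = 1 if rank < half else -1
    return matrix


def classify_open_set(
    probs: Sequence[float], matrix: List[List[int]], threshold: float
) -> Tuple[Optional[int], List[float]]:
    """Aggregate binary outputs into a known-class label, or None to reject.

    probs[j] is assumed to be classifier j's probability that the sample
    belongs to the +1 group of binary problem j.
    """
    scores = []
    for code in matrix:
        # Mean agreement between the classifiers' outputs and this class's code.
        agreement = sum(p if bit == 1 else 1.0 - p for p, bit in zip(probs, code))
        scores.append(agreement / len(code))
    best = max(range(len(scores)), key=scores.__getitem__)
    if scores[best] < threshold:
        return None, scores  # no known class fits well enough: treat as unknown
    return best, scores


if __name__ == "__main__":
    codes = make_code_matrix(num_classes=6, num_classifiers=4, seed=1)
    label, _ = classify_open_set([0.9, 0.2, 0.7, 0.1], codes, threshold=0.8)
    print("predicted label:", label)
```

In this sketch the number of binary classifiers (columns) can be smaller than the number of classes, which loosely mirrors the abstract's observation that a low number of binary classifiers can suffice. The threshold trades off correct classification of known samples against rejection of unknown ones.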

For more information, please refer to the PDF version of my thesis: Master Thesis.pdf